Apple is delaying its previously announced plan to scan users' devices for child sexual abuse material amid widespread criticism and privacy concerns.

Apple explained the decision in a statement on its website:

Based on feedback from customers, advocacy groups, researchers, and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.

Apple Faces Privacy Concerns

According to the iPhone maker, the tool scans phones and cloud storage for child abuse imagery, then reports 'flagged' owners to the police once an Apple employee has manually reviewed the match.

The "Child Protections" feature, announced in August, drew global criticism from other tech giants and from users, who fear the tool could be the start of an 'infrastructure for surveillance and censorship.'

The head of WhatsApp, Will Cathcart, was among those who took a stand, tweeting:

We’ve had personal computers for decades and there has never been a mandate to scan the private content of all desktops, laptops or phones globally for unlawful content. It’s not how technology built in free countries works.

— Will Cathcart (@wcathcart) August 6, 2021

Apple has since backtracked on its decision, a move welcomed by many users, including whistleblower Edward Snowden:

I agree with this. @Apple must go further than merely "listening"—it must drop entirely its plans to put a backdoor into systems that provide vital protections to the public. Don't make us fight you for basic privacy rights. https://t.co/n4adiy6f0n

— Edward Snowden (@Snowden) September 4, 2021

Apple and Child Protection

The delay announcement didn't specify what kind of input Apple would gather or how many months the delay would last. However, the iPhone maker has announced new child safety features in three main areas:

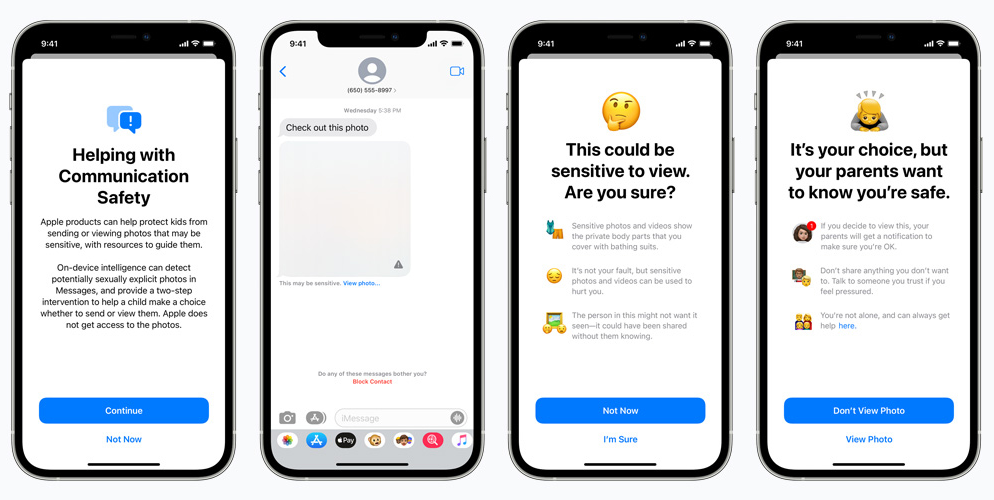

1. New communication tools that enable parents to watch their children's content.

2. New applications of cryptography in both iOS and iPadOS that will limit the spread of Child Sexual Abuse Material (CSAM) online.

3. Siri and Search will give parents guidance on unsafe situations and will intervene when users try to search for CSAM-related topics.

Messages will warn children and their parents when receiving or sending sexually explicit photos. Source: Apple