Leaps in mind-reading AI to revolutionize neuroscience, technology

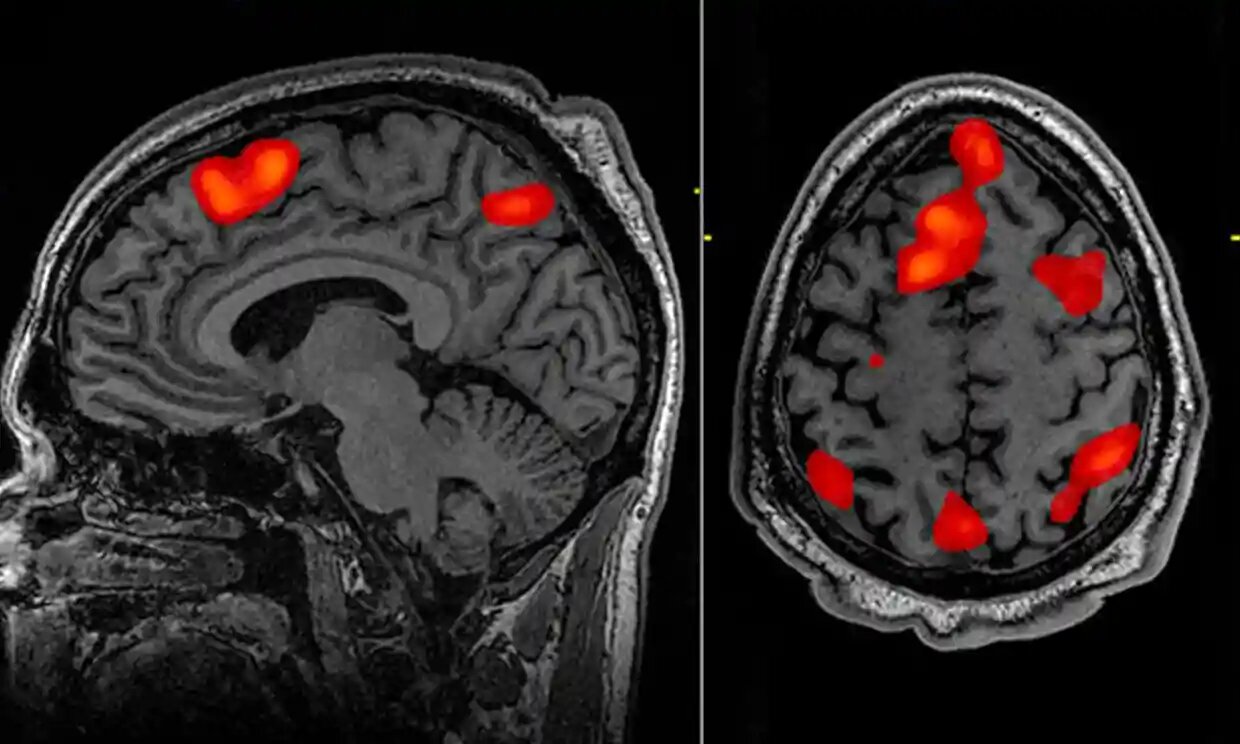

ALBAWABA – Scientists have developed an artificial intelligence-powered decoder that can translate brain activity into a continuous stream of text, allowing non-invasive mind-reading AI to read your thoughts using only fMRI scan data.

Meanwhile, a separate advance allows mind-reading AI to recreate the images you see.

Back in May, The Guardian reported a breakthrough in the AI-powered technology that allowed decoders to accurately reconstruct speech as people listened to stories or even silently imagined them. The experiment was led by Dr Alexander Huth and his team at the University of Texas at Austin in the United States, according to Agence France-Presse (AFP).

The mind-reading AI decoder could reconstruct speech using fMRI scan data. Photograph: John Graner

Later, in August, Reuters reported another leap in the technology, which enabled decoders to recreate images that people saw, in an experiment involving dozens of participants in Singapore. The scientists behind this breakthrough called their system MinD-Vis.

Mind-reading AI that can decode your thoughts

According to Huth: “We were kind of shocked that it works as well as it does. I’ve been working on this for 15 years … so it was shocking and exciting when it finally did work.”

This technology overcomes a fundamental limitation of fMRI: the time lag.

While fMRI can map brain activity to a specific location with incredibly high resolution, it has an inherent time lag that makes tracking activity in real time impossible, The Guardian reported.

The lag exists because fMRI scans measure the blood flow response to brain activity, which peaks and returns to baseline over about 10 seconds. Even the most powerful scanner cannot improve on this. “It’s this noisy, sluggish proxy for neural activity,” said Huth.

In just a few seconds, the brain responds to natural speech with an explosion of information that is extremely difficult to track using fMRI and impossible to filter, according to Huth.

The scan therefore gives a “mishmash of information” spread over a few seconds.
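To make the lag concrete, here is a toy Python sketch; it is an illustration only, not anything from the Texas study. It assumes a gamma-shaped blood-flow response that peaks about 5 seconds after a brief burst of neural activity, and the function names and parameter values are hypothetical choices. A single heard word produces a signal that peaks seconds later, and five words heard one second apart blur into a single hump, which is the “mishmash” Huth describes.

```python
import math


def hrf(t, shape=5.0, scale=1.0):
    """Toy hemodynamic (blood-flow) response to a brief neural event at t = 0.

    A gamma-shaped bump that peaks at shape * scale seconds and then decays
    back toward baseline. The parameter values are illustrative assumptions,
    not fitted to real fMRI data.
    """
    if t < 0:
        return 0.0
    return (t ** shape) * math.exp(-t / scale)


def bold_signal(event_times, duration=20.0, dt=0.5):
    """Add up the slow responses to each event (e.g. each heard word)."""
    n = int(duration / dt) + 1
    times = [i * dt for i in range(n)]
    signal = [sum(hrf(t - e) for e in event_times) for t in times]
    return times, signal


def count_peaks(signal):
    """Count local maxima in a sampled signal."""
    return sum(
        1
        for i in range(1, len(signal) - 1)
        if signal[i - 1] < signal[i] >= signal[i + 1]
    )


# One word heard at t = 0 s: the measured signal only peaks seconds later.
times, single = bold_signal([0.0])
peak_time = times[max(range(len(single)), key=single.__getitem__)]
print(f"one word: peak at {peak_time:.1f} s")  # → one word: peak at 5.0 s

# Five words, one per second: their responses merge into a single hump.
times, burst = bold_signal([0.0, 1.0, 2.0, 3.0, 4.0])
print(f"five words: {count_peaks(burst)} peak(s)")  # → five words: 1 peak(s)
```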

Mind-reading AI relies on language models such as ChatGPT and Bard AI - Shutterstock

However, the advent of large language models, the kind of AI underpinning OpenAI’s ChatGPT, opened up a whole new way forward for scientists and innovators alike.

In short, these models can represent the semantic meaning of speech in numbers. This allows scientists to look at which patterns of neuronal activity correspond to strings of words with a particular meaning, rather than attempting to read out activity word by word.
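The idea of matching meaning, rather than individual words, can be illustrated with a toy Python sketch. It assumes a hypothetical three-number “meaning” vector for each candidate phrase and a hypothetical linear model that predicts brain activity from that vector; the decoder then picks the phrase whose predicted activity best correlates with the observed scan. All phrases, numbers and function names are invented for illustration and are not taken from the University of Texas study.

```python
import math

# Hypothetical "meaning" vectors for a few candidate phrases. Real language
# models (the study used GPT-1) represent meaning with hundreds of numbers.
EMBEDDINGS = {
    "she opened the door": [0.9, 0.1, 0.0],
    "he walked into the room": [0.8, 0.3, 0.1],
    "the stock market fell": [0.0, 0.2, 0.9],
}

# Hypothetical per-person model: each row maps a meaning vector to the
# predicted activity of one brain region. Such weights would have to be
# learned from many hours of scans of the same person.
WEIGHTS = [
    [1.0, 0.2, 0.0],
    [0.5, 1.0, 0.1],
    [0.0, 0.3, 1.0],
    [0.2, 0.0, 0.7],
]


def predict_activity(meaning):
    """Predicted activity in each region for a given meaning vector."""
    return [sum(w * x for w, x in zip(row, meaning)) for row in WEIGHTS]


def correlation(a, b):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    spread_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    spread_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    return cov / (spread_a * spread_b)


def decode(observed):
    """Pick the phrase whose predicted activity best matches the scan."""

    def score(phrase):
        return correlation(predict_activity(EMBEDDINGS[phrase]), observed)

    return max(EMBEDDINGS, key=score)


# A made-up scan recorded while someone hears about a door being opened.
print(decode([0.95, 0.6, 0.05, 0.15]))  # → she opened the door
```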

The learning process was intensive, according to Huth.

Three volunteers were required to lie in a scanner for 16 hours each, listening to podcasts. The decoder was trained to match brain activity to meaning using a large language model, GPT-1, a precursor to ChatGPT.

Later, the same participants were scanned while listening to a new story or imagining telling one, and the decoder was used to generate text from brain activity alone. About half the time, the text closely – and sometimes precisely – matched the intended meanings of the original words.

“Our system works at the level of ideas, semantics, meaning,” Huth said. “This is the reason why what we get out is not the exact words, it’s the gist.”

The participants also watched four short, silent videos while in the scanner. The decoder was able to use their brain activity to accurately describe some of the content, the paper in Nature Neuroscience said, as reported by AFP.

Mind-reading AI that can see what you see

In Singapore, meanwhile, the research team collected brain scan data from about 58 participants. The participants were shown between 1,200 and 5,000 different images of animals, food, buildings and human activities while undergoing MRI scans. Each session lasted 9 seconds, with a break in between.

MinD-Vis, the mind-reading AI, then matches the brain scans with the images to generate an individual AI model for each participant.

The system then "read" their thoughts and re-created the visuals they were looking at.

Various advancements may follow in mind-reading AI technology as the race intensifies - Freepik

"It can understand your brain activities just like ChatGPT understands the natural languages of humans. And then it will translate your brain activities into a language that the Stable Diffusion [an open source AI which generates images from text] can understand," said Jiaxin Qing, a PhD student at The Chinese University of Hong Kong and one of the lead researchers on the study.

According to Qing, the decoded images were consistently similar to what was shown to the participants.
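As a rough illustration of why MinD-Vis builds a separate model for each participant, the toy Python sketch below swaps in a much simpler technique, nearest-neighbour matching, for the study's actual deep-learning pipeline. The class name, the three-number “scans” and the image labels are all hypothetical; in the real system the decoded output is passed to an image generator such as Stable Diffusion rather than returned as a text label.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length lists of numbers."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class ParticipantDecoder:
    """Hypothetical decoder trained on ONE person's scans.

    It stores (scan, image label) pairs and labels a new scan with the label
    of the most similar stored scan (nearest-neighbour matching).
    """

    def __init__(self):
        self.examples = []

    def train(self, scan, image_label):
        self.examples.append((scan, image_label))

    def decode(self, scan):
        _, label = max(self.examples, key=lambda ex: cosine(ex[0], scan))
        return label


# Made-up three-number "scans" recorded from participant A.
participant_a = ParticipantDecoder()
participant_a.train([0.9, 0.1, 0.2], "a dog")
participant_a.train([0.1, 0.8, 0.3], "a plate of food")
participant_a.train([0.2, 0.2, 0.9], "a building")

# A new scan from participant A viewing a dog is decoded correctly.
print(participant_a.decode([0.85, 0.15, 0.25]))  # → a dog

# Participant B's brain responds differently to the same dog picture, so
# participant A's decoder gets it wrong.
print(participant_a.decode([0.25, 0.15, 0.85]))  # → a building
```

The second decode fails because the model only knows one person's brain, the same inter-individual difference that the Singapore researchers cite as a major obstacle to any general-purpose mind reader.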

What does this mean for mind-reading AI?

These are the first effective, non-invasive mind-reading technologies ever developed, according to Reuters.

All previous language decoding systems required surgical implants to deliver similar results, while non-invasive technology could decode only single words or short strings of words, with little to no accuracy, Huth said.

A breakthrough of this magnitude could have far-reaching practical applications.

It points to a way of restoring speech to patients who struggle to communicate because of strokes or motor neurone disease, without invasive surgery or implants.

The Singapore experiment points to similar uses. “Say for some patients without motor ability. Maybe we can help him to control their robots (artificial limbs)... (or) communicate with others like just using their thoughts instead of speech if that person couldn't speak at that time,” said Chen Zijiao, at the National University of Singapore’s School of Medicine.

The technology could also be developed for virtual reality headsets, allowing users to control their movements in the metaverse with their minds instead of physical controllers, he added.

Ethical implications of mind-reading AI

Whether mind-reading AI will ever be able to read the public’s minds is a thorny question.

We are still years away from any technology that could enable mass mind-reading, the Singapore team told Reuters.

"We are trying to test the possibility right now, but I will say in terms of the dataset that is available right now, the computational power we have, as well as the huge heterogeneity or inter-individual differences in our brain anatomy as well as brain function; this is going to be very, very difficult," said Juan Helen Zhou, an associate professor at the National University of Singapore.

There is also the risk that the data used to train the AI could be shared without consent, Reuters pointed out.

AI is used in a variety of domains and is now essential to decoding thoughts using mind-reading AI-powered technology - Shutterstock

For now, the relative lack of legislation governing AI research could hinder progress.

"The privacy concerns are the first important thing and then people might be worried, whether the information we provided here might be assessed or shared without prior consent. So the thing to address this is we should have very strict guidelines, ethical and law in terms of how to protect the privacy," said Zhou.

Jerry Tang, a doctoral student at the University of Texas and a co-author of the research paper, said: “We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that. We want to make sure people only use these types of technologies when they want to and that it helps them.”

Participants on whom the decoder had been trained could also thwart the system simply by thinking of animals or quietly imagining another story, The Guardian reported.

Moving forward with mind-reading AI

A major limitation of the University of Texas experiment is that the decoder was highly personalized: when the model was tested on other people, the readouts were unintelligible.

Still, Prof Tim Behrens, a computational neuroscientist at the University of Oxford who was not involved in the work, described these breakthroughs as “technically extremely impressive” and said they open up a host of experimental possibilities. These include, but are not limited to, reading people’s thoughts while dreaming or investigating how new ideas spring up from background brain activity.

“These generative models are letting you see what’s in the brain at a new level,” he told The Guardian. “It means you can really read out something deep from the fMRI.”

Mind-reading AI can decode strings of words and, in some cases, entire stories - Freepik

The University of Texas team is hopeful that its technique could be applied to other, more portable brain-imaging systems, such as functional near-infrared spectroscopy (fNIRS).

These findings are all the more significant because they come at a time when major companies are investing billions to push the boundaries of more invasive alternatives. One such company is Elon Musk’s Neuralink.

As of June this year, Musk’s Neuralink was already valued at nearly $5 billion.

Progress on both frontiers, invasive and non-invasive, may ignite a race that could polarize the tech and scientific communities with vested interests in the field, while bringing in massive investment to fuel major leaps in the near future.